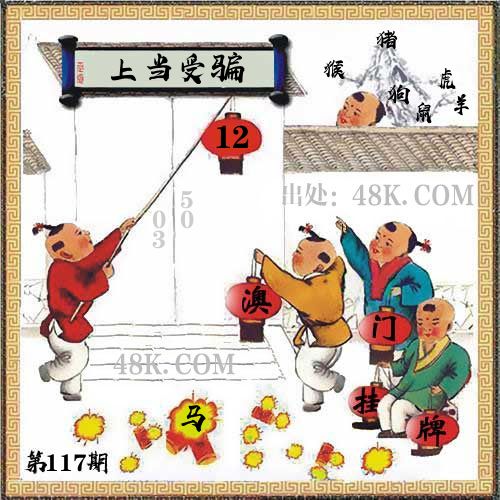

Draw 117: Macau Tiantian Haocai (天天好彩) official placard

| Draw 117 | |
|---|---|
| Placard | 12 |
| Fire | Horse |
| Banner | 上当受骗 ("taken in and cheated") |
| Door numbers | 03, 05 |
| Six zodiacs | Pig, Monkey, Tiger, Dog, Goat, Rat |

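Macau placard analysis

2024, draw 117, official color-chart placard: 12; placard phrase: 上当受骗; six zodiacs: Pig, Monkey, Tiger, Dog, Goat, Rat; fire: Horse.

Analysis: 【Source】 Lu Wenfu, 《微弱的光》 (Faint Light): "These nine years were not entirely wasted; I thought through many problems and am no longer so easily taken in and cheated." 【Example】 Liu Xinwu, 《钟鼓楼》 (Bell and Drum Towers), Chapter 5: "He truly felt he had been taken in and cheated."

Meaning: to be deceived and suffer a loss by taking something false as true.

Combined zodiac picks: Snake, Rooster, Goat, Monkey, Dog, Rat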

Macau highlights

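- Draw 117: 【贴身侍从】 Sure-hit two colors (published)

- Draw 117: 【过路友人】 One number for the special number (published)

- Draw 117: 【熬出头儿】 Eliminate two zodiacs (published)

- Draw 117: 【匆匆一见】 Safely eliminate 5 numbers (published)

- Draw 117: 【风尘满身】 Eliminate one tail digit (published)

- Draw 117: 【秋冬冗长】 Two banned digit sums (published)

- Draw 117: 【三分酒意】 Eliminate one head (tens digit) (published)

- Draw 117: 【最爱自己】 24 numbers sure to appear (published)

- Draw 117: 【猫三狗四】 Eliminate one segment (published)

- Draw 117: 【白衫学长】 Eliminate one zodiac (published)

- Draw 117: 【满目河山】 Two colors to hit (published)

- Draw 117: 【寥若星辰】 Special number: three elements (published)

- Draw 117: 【凡间来客】 Seven tail digits for the special number (published)

- Draw 117: 【川岛出逃】 Two colors for the special number (published)

- Draw 117: 【一吻成瘾】 Five strong zodiacs (published)

- Draw 117: 【初心依旧】 Eliminate four zodiacs (published)

- Draw 117: 【真知灼见】 Seven zodiacs for the special number (published)

- Draw 117: 【四虎归山】 Special number odd/even (published)

- Draw 117: 【夜晚归客】 Eight-zodiac pick (published)

- Draw 117: 【夏日奇遇】 Safely eliminate two tail digits (published)

- Draw 117: 【感慨人生】 One regular-special zodiac (published)

- Draw 117: 【回忆往事】 Male/female zodiacs for the special number (published)

- Draw 117: 【疯狂一夜】 Odd/even for the special number (published)

- Draw 117: 【道士出山】 Eliminate two zodiacs (published)

- Draw 117: 【相逢一笑】 Six zodiacs for the special number (published)

- Draw 117: 【两只老虎】 Eliminate one half-color (published)

- Draw 117: 【无地自容】 Eliminate three zodiacs (published)

- Draw 117: 【凉亭相遇】 Six zodiacs to hit (published)

- Draw 117: 【我本闲凉】 Safely eliminate 12 numbers (published)

- Draw 117: 【兴趣部落】 Sure-hit color (published)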

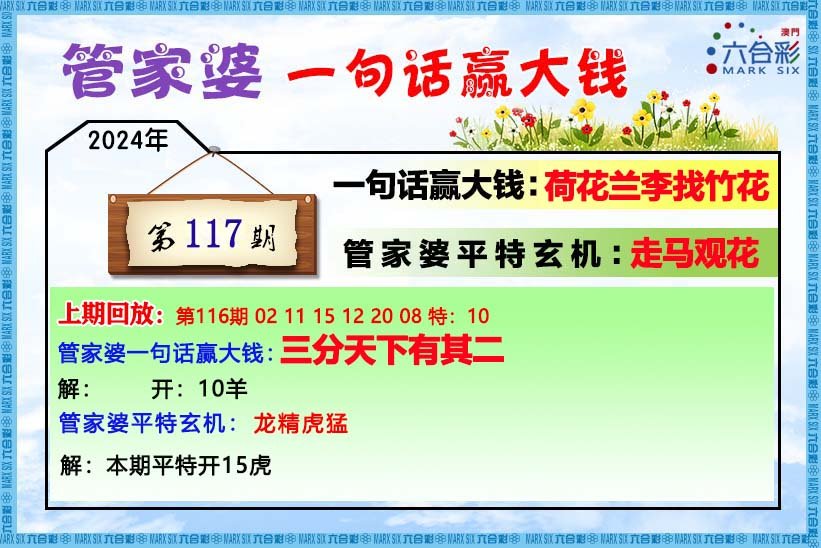

【Housekeeper's one-line tip】

【Six zodiacs, eighteen numbers】

【Six zodiacs for the special number】

【Regular-tail favorite secrets】

Macau lottery materials: exclusive four-zodiac picks

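| Draw | Picks | Zodiacs | Special number | Hit |
|---|---|---|---|---|
| 117 | 9 zodiacs | Dog, Snake, Rat, Rabbit, Tiger, Monkey, Pig, Ox, Dragon | Pending | Pending |
| 117 | 8 zodiacs | Dog, Snake, Rat, Rabbit, Tiger, Monkey, Pig, Ox | Pending | Pending |
| 117 | 7 zodiacs | Dog, Snake, Rat, Rabbit, Tiger, Monkey, Pig | Pending | Pending |
| 117 | 6 zodiacs | Dog, Snake, Rat, Rabbit, Tiger, Monkey | Pending | Pending |
| 117 | 5 zodiacs | Dog, Snake, Rat, Rabbit, Tiger | Pending | Pending |
| 117 | 4 zodiacs | Dog, Snake, Rat, Rabbit | Pending | Pending |

Past draws (long-term tracking, "steady profit, never a loss!"):

| Draw | Picks | Zodiacs | Special number | Hit |
|---|---|---|---|---|
| 116 | 9 zodiacs | Rat, Monkey, Snake, Rabbit, Dog, Goat, Tiger, Horse, Dragon | Goat 10 | Yes |
| 116 | 8 zodiacs | Rat, Monkey, Snake, Rabbit, Dog, Goat, Tiger, Horse | Goat 10 | Yes |
| 116 | 7 zodiacs | Rat, Monkey, Snake, Rabbit, Dog, Goat, Tiger | Goat 10 | Yes |
| 116 | 6 zodiacs | Rat, Monkey, Snake, Rabbit, Dog, Goat | Goat 10 | Yes |
| 116 | 5 zodiacs | Rat, Monkey, Snake, Rabbit, Dog | Goat 10 | No |
| 116 | 4 zodiacs | Rat, Monkey, Snake, Rabbit | Goat 10 | No |
| 115 | 9 zodiacs | Ox, Dog, Goat, Tiger, Rat, Monkey, Pig, Dragon, Snake | Rat 29 | Yes |
| 115 | 8 zodiacs | Ox, Dog, Goat, Tiger, Rat, Monkey, Pig, Dragon | Rat 29 | Yes |
| 115 | 7 zodiacs | Ox, Dog, Goat, Tiger, Rat, Monkey, Pig | Rat 29 | Yes |
| 115 | 6 zodiacs | Ox, Dog, Goat, Tiger, Rat, Monkey | Rat 29 | Yes |
| 115 | 5 zodiacs | Ox, Dog, Goat, Tiger, Rat | Rat 29 | Yes |
| 115 | 4 zodiacs | Ox, Dog, Goat, Tiger | Rat 29 | No |
| 114 | 9 zodiacs | Snake, Goat, Rooster, Monkey, Dragon, Ox, Horse, Rat, Pig | Pig 18 | Yes |
| 114 | 8 zodiacs | Snake, Goat, Rooster, Monkey, Dragon, Ox, Horse, Rat | Pig 18 | No |
| 114 | 7 zodiacs | Snake, Goat, Rooster, Monkey, Dragon, Ox, Horse | Pig 18 | No |
| 114 | 6 zodiacs | Snake, Goat, Rooster, Monkey, Dragon, Ox | Pig 18 | No |
| 114 | 5 zodiacs | Snake, Goat, Rooster, Monkey, Dragon | Pig 18 | No |
| 114 | 4 zodiacs | Snake, Goat, Rooster, Monkey | Pig 18 | No |
| 113 | 9 zodiacs | Snake, Rooster, Horse, Rabbit, Ox, Goat, Dog, Pig, Tiger | Snake 24 | Yes |
| 113 | 8 zodiacs | Snake, Rooster, Horse, Rabbit, Ox, Goat, Dog, Pig | Snake 24 | Yes |
| 113 | 7 zodiacs | Snake, Rooster, Horse, Rabbit, Ox, Goat, Dog | Snake 24 | Yes |
| 113 | 6 zodiacs | Snake, Rooster, Horse, Rabbit, Ox, Goat | Snake 24 | Yes |
| 113 | 5 zodiacs | Snake, Rooster, Horse, Rabbit, Ox | Snake 24 | Yes |
| 113 | 4 zodiacs | Snake, Rooster, Horse, Rabbit | Snake 24 | Yes |
| 112 | 9 zodiacs | Dragon, Ox, Snake, Monkey, Rat, Rabbit, Tiger, Dog, Horse | Dog 43 | Yes |
| 112 | 8 zodiacs | Dragon, Ox, Snake, Monkey, Rat, Rabbit, Tiger, Dog | Dog 43 | Yes |
| 112 | 7 zodiacs | Dragon, Ox, Snake, Monkey, Rat, Rabbit, Tiger | Dog 43 | No |
| 112 | 6 zodiacs | Dragon, Ox, Snake, Monkey, Rat, Rabbit | Dog 43 | No |
| 112 | 5 zodiacs | Dragon, Ox, Snake, Monkey, Rat | Dog 43 | No |
| 112 | 4 zodiacs | Dragon, Ox, Snake, Monkey | Dog 43 | No |
| 111 | 9 zodiacs | Goat, Rabbit, Dragon, Horse, Snake, Monkey, Pig, Ox, Dog | Dog 43 | Yes |
| 111 | 8 zodiacs | Goat, Rabbit, Dragon, Horse, Snake, Monkey, Pig, Ox | Dog 43 | No |
| 111 | 7 zodiacs | Goat, Rabbit, Dragon, Horse, Snake, Monkey, Pig | Dog 43 | No |
| 111 | 6 zodiacs | Goat, Rabbit, Dragon, Horse, Snake, Monkey | Dog 43 | No |
| 111 | 5 zodiacs | Goat, Rabbit, Dragon, Horse, Snake | Dog 43 | No |
| 111 | 4 zodiacs | Goat, Rabbit, Dragon, Horse | Dog 43 | No |
| 110 | 9 zodiacs | Goat, Ox, Monkey, Pig, Rat, Tiger, Snake, Dragon, Rooster | Snake 48 | Yes |
| 110 | 8 zodiacs | Goat, Ox, Monkey, Pig, Rat, Tiger, Snake, Dragon | Snake 48 | Yes |
| 110 | 7 zodiacs | Goat, Ox, Monkey, Pig, Rat, Tiger, Snake | Snake 48 | Yes |
| 110 | 6 zodiacs | Goat, Ox, Monkey, Pig, Rat, Tiger | Snake 48 | No |
| 110 | 5 zodiacs | Goat, Ox, Monkey, Pig, Rat | Snake 48 | No |
| 110 | 4 zodiacs | Goat, Ox, Monkey, Pig | Snake 48 | No |
| 109 | 9 zodiacs | Pig, Rat, Monkey, Goat, Tiger, Dragon, Rooster, Ox, Dog | Dog 43 | Yes |
| 109 | 8 zodiacs | Pig, Rat, Monkey, Goat, Tiger, Dragon, Rooster, Ox | Dog 43 | No |
| 109 | 7 zodiacs | Pig, Rat, Monkey, Goat, Tiger, Dragon, Rooster | Dog 43 | No |
| 109 | 6 zodiacs | Pig, Rat, Monkey, Goat, Tiger, Dragon | Dog 43 | No |
| 109 | 5 zodiacs | Pig, Rat, Monkey, Goat, Tiger | Dog 43 | No |
| 109 | 4 zodiacs | Pig, Rat, Monkey, Goat | Dog 43 | No |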